The Case Against Me

The strongest argument against my position isn't about cheating. It's about cognition. If a student uses AI to write an essay, did they learn to think? A doctor using AI diagnostics still needs to understand anatomy. A lawyer using AI research still needs to understand legal reasoning. The question isn't whether AI can produce the output. It's whether the human developed the underlying capability.

We've been here before with calculators. The educators who warned that students would lose number sense weren't entirely wrong. Many students did lose it. The question is whether that tradeoff was worth it. With AI, the stakes are higher because the capability gap is larger.

This is a real concern. I just think it points in a different direction than most schools are going.

The Real Question

Let me be precise about what I'm arguing. We still teach kids multiplication even though calculators exist. That's right: some foundational knowledge needs to be in human heads. We should use in-person testing and oral examination to verify that students can actually think and communicate. That's also right.

But here's what's coming: within 12-18 months, most knowledge workers will be working with AI all day long. Not occasionally. Not as a novelty. As the default mode of work.

Schools need to overhaul how courses are taught to prepare students for this reality. That doesn't mean "all AI, all the time." It means recognizing that teaching while ignoring AI is like teaching creationism after Darwin. You can do it, but you're preparing students for a world that no longer exists.

The International Baccalaureate has taken a restrictive approach that treats AI as a threat to be managed rather than a capability to be developed. That's not protecting academic integrity. That's institutional denial dressed up as policy.

Arnold Kling frames this as adversarial versus cooperative education. Schools treating students as adversaries who need to be policed will lose to schools treating AI as a tool students need to master.

Pattern Recognition

I've seen this game before and the players are predictable.

Incumbent institutions defend the status quo because change is expensive. Faculty would need retraining. Assessment systems would need rebuilding. Accreditation standards would need updating. It's easier to ban the problem than to solve it.

Students adopt tools that make their lives better, regardless of official policy. They're not cheating. They're being rational. If your future employer expects AI fluency, why wouldn't you develop it now?

Employers are the wild card. They don't care about academic integrity. They care about results. Google doesn't ask if you used AI to write your code. Goldman Sachs doesn't care if you used AI to build your financial models. They care if the code works and the models predict.

The equilibrium is unstable. Once the first wave of AI-trained students hits the job market and significantly outperforms its traditionally educated peers, institutional resistance collapses overnight. I expect this within 18 months.

How I Got Claude-Pilled

Ten months ago I started using ChatGPT's o3 reasoning model. That was the first time the AI kept up with me. It didn't just retrieve information. It extended my thinking. It felt like working with a partner rather than querying a database.

Then I learned about agents, AI systems that don't just answer questions but actually do work. They research topics. They write drafts. They run analyses in parallel. I started understanding what "working with AI" actually meant in practice.

Now with Opus 4.5, it's a jetpack. I can ask it to build things, not just explain things. "Create a financial model." "Analyze this dataset." "Help me think through the game theory of this situation." The productivity gap between people who work with AI and people who don't is already massive and widening daily.

The rest of the world will get here in 6-12 months. The students graduating in 2027 who never learned to work this way will be starting their careers already behind.

The Adoption Curve Is Steepening

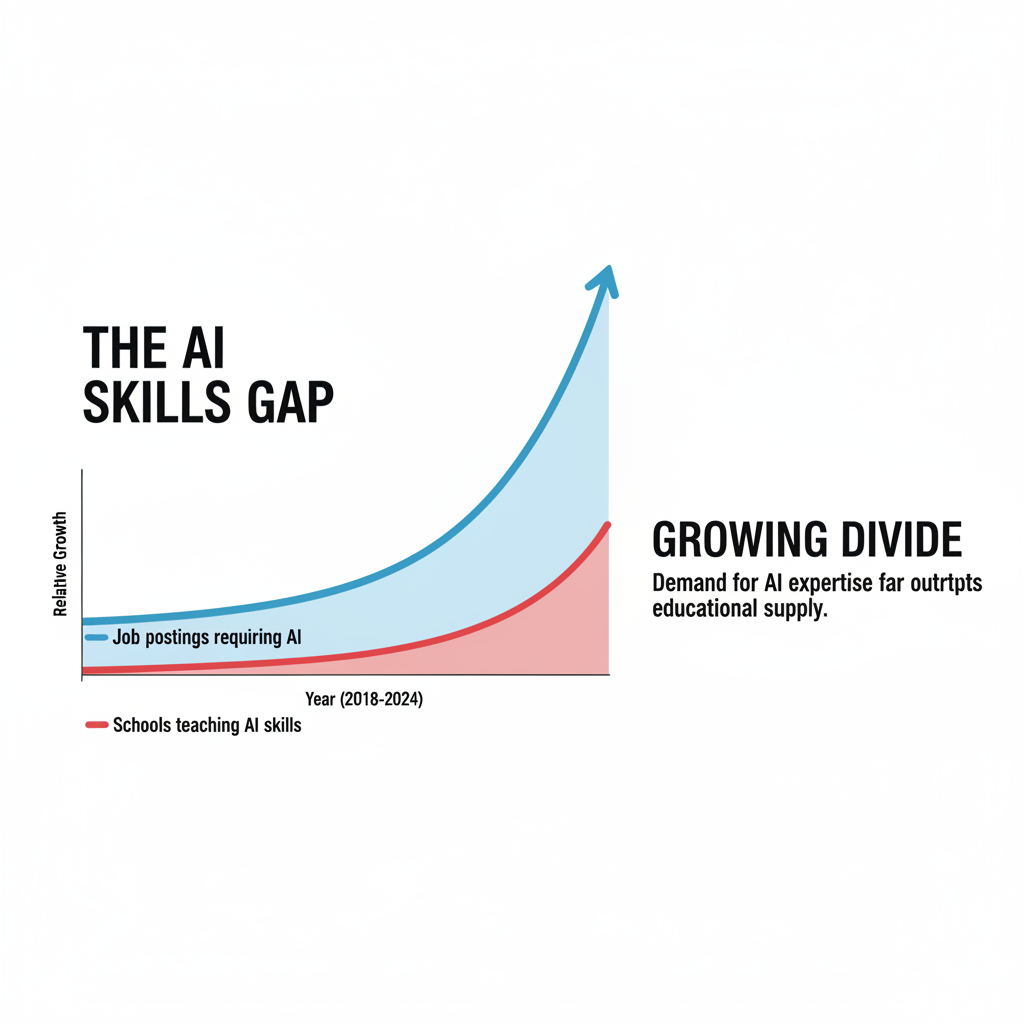

The data backs this up. A 2023 GitHub survey found that 92% of U.S.-based developers use AI coding tools. McKinsey found that lawyers using AI complete research tasks 30% faster with 25% higher quality. Medical residents using AI diagnostic tools are outperforming experienced doctors on pattern recognition tests.

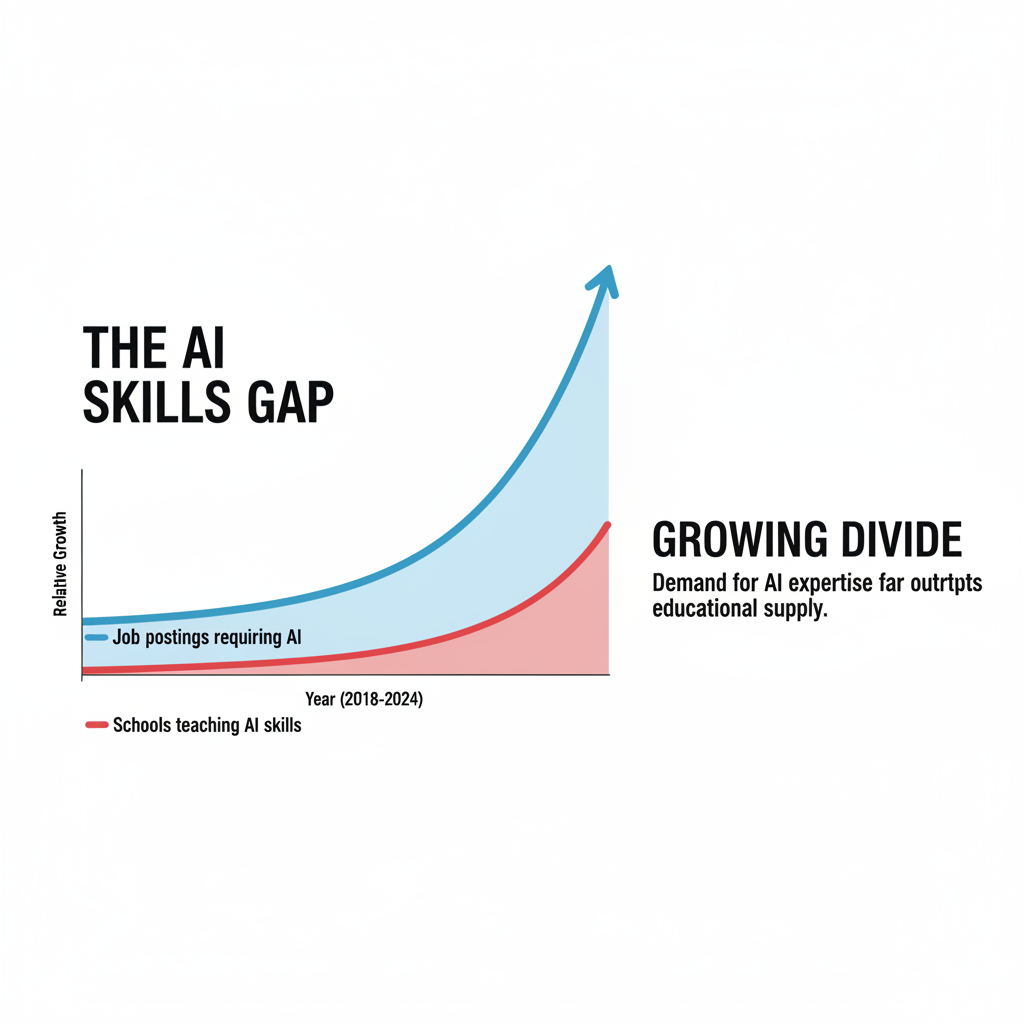

Here's what matters: MIT just launched a certificate program in "Agentic AI" that teaches students to build AI systems that can act autonomously. Johns Hopkins is piloting AI collaboration courses. Stanford is integrating prompt engineering into its computer science curriculum.

Meanwhile, most high schools are debating whether to allow AI at all.

The competitive gap is widening. South Korea announced a $2 billion AI education initiative. Singapore is training teachers in AI pedagogy. China views AI literacy as a national security priority.

We're bringing calculators to a computer fight.

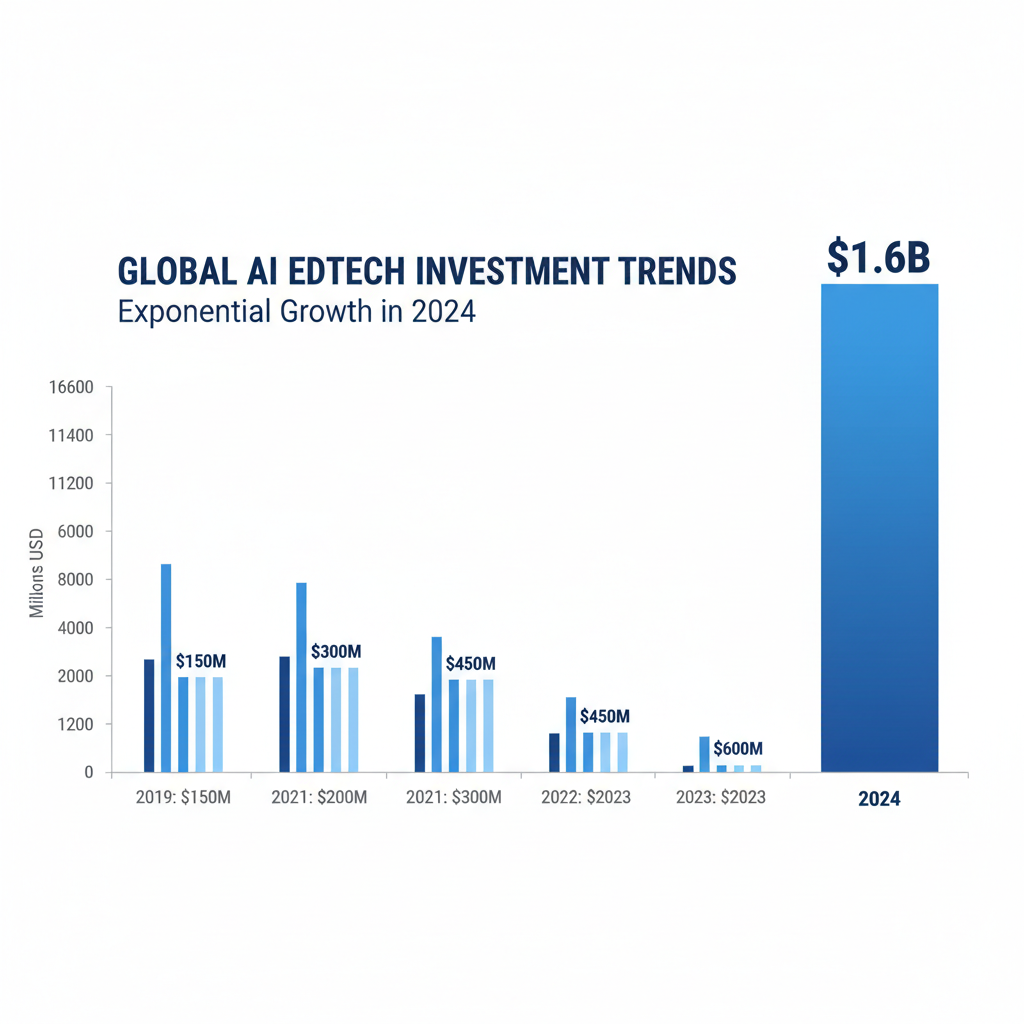

Making Book

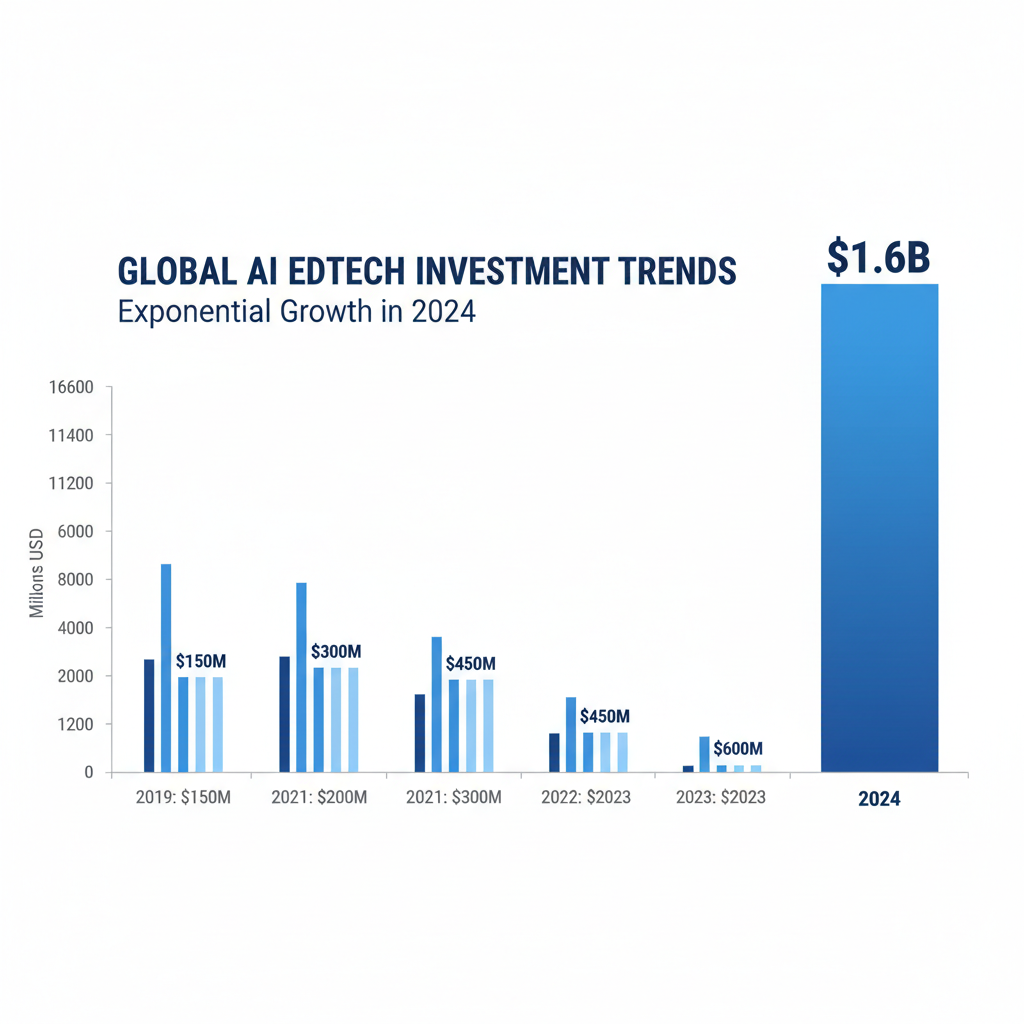

The money tells the story. EdTech startups focused on AI tutoring raised $1.6 billion in 2024, more than the previous five years combined. Job postings requiring AI skills tripled in two years. Early data from AI tutoring programs shows significant improvements in learning outcomes compared to traditional instruction.

My bet: By 2027, students from schools that banned AI will be unemployable in knowledge work. Not because they're less smart, but because they never learned to collaborate with the most powerful tools ever built.

Schools have 18 months before employment data makes their position untenable. The first cohort of AI-native students will graduate soon. When they dominate job placement rates, and I expect they will, every parent will demand an AI curriculum and every university will scramble to catch up.

The Bottom Line

The cheating debate isn't about academic integrity. It's about institutional comfort. Schools would rather police AI use than admit they don't know how to teach AI skills.

But students have already decided. They're using AI regardless of policy because they understand the stakes. The question isn't whether AI belongs in education. The question is whether education wants to remain relevant in an AI world.

Download Claude Code and start asking it things. You'll stop arguing after that.

P.S. This essay was written using AI collaboration for researching data, testing arguments, and refining prose. Not because I'm lazy, but because it's 2026 and my job is to think clearly, not to perform cognitive theater. The AI didn't write this, but it helped me write it better and faster than I could have managed alone. Which is exactly the point.